EmoTourDB is the first-of-its-kind corpus of Emotionally Labelled Multimodal Touristic Behavior.

Consisting of spontaneous data recorded in real conditions, it is a unique collection that describes the behavior of participants in terms of conscious and unconscious cues, covering audio, video and physiological features as well as the visible surroundings.

Location

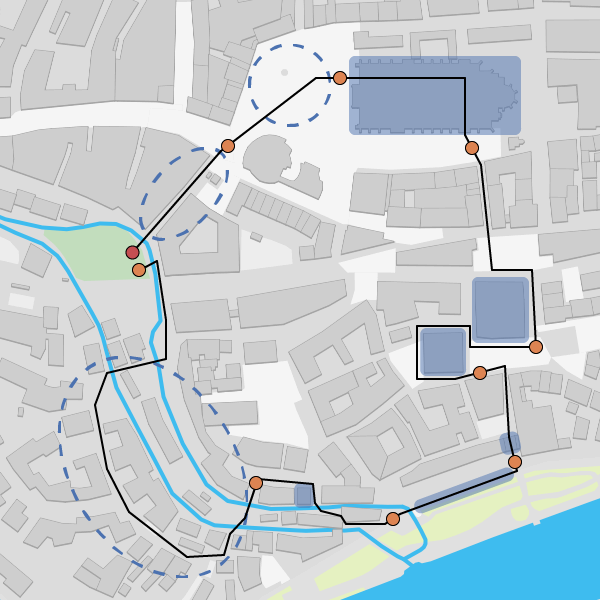

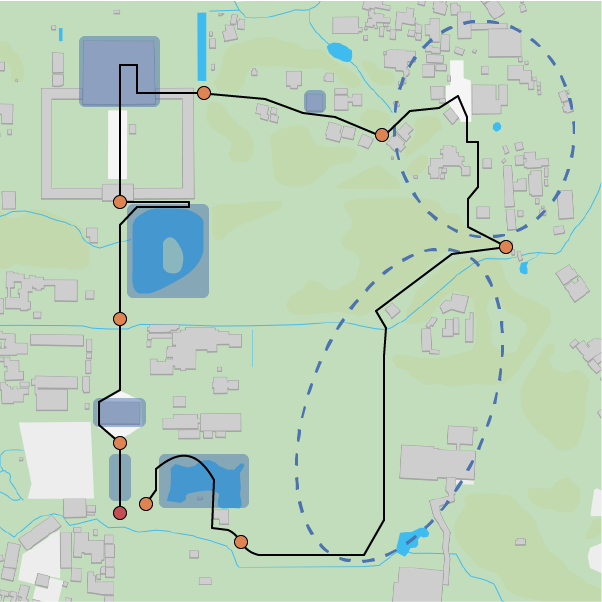

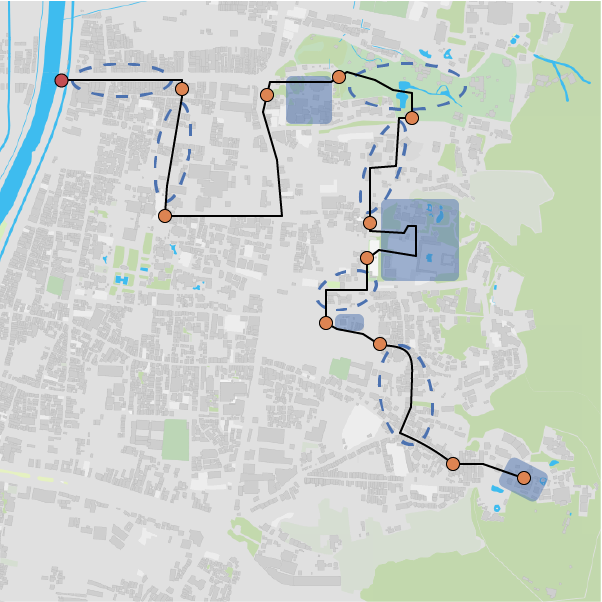

The corpus contains data from 47 participants from 12 countries, recorded in historical areas of three cities: Nara (Japan), Ulm (Germany) and Kyoto (Japan). Participants followed a predefined touristic route but were free to choose their own pace and actions during the experiments, which brings the recordings as close as possible to in-the-wild conditions.

© OpenStreetMap contributors. Tile: OSM SE hydda base.

Devices

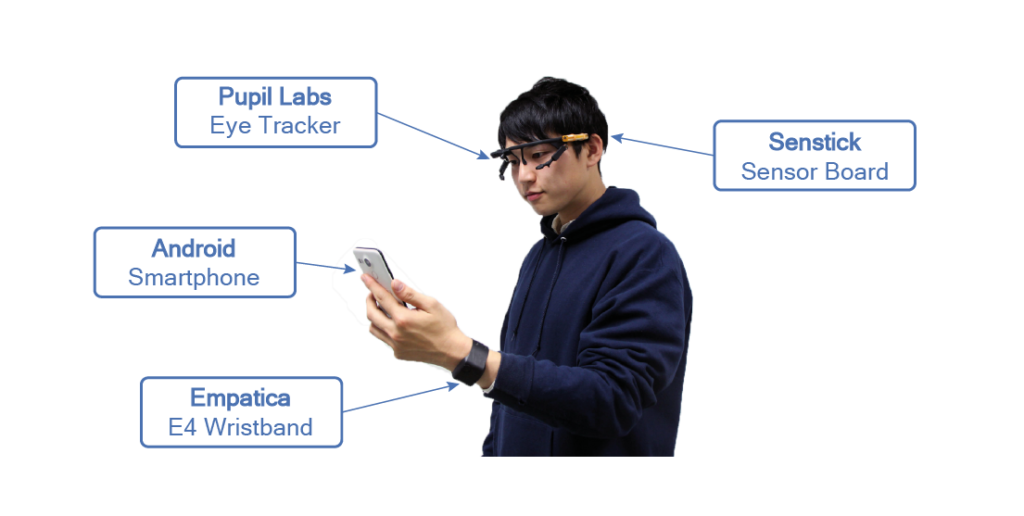

To capture as much information as possible about participants' behavior, we used the following devices:

- Pupil Labs Eye Tracker with infrared eye cameras and color world camera;

- SenStick Sensor Board collecting data on head and body movements, as well as environmental data (temperature, humidity, atmospheric pressure, illumination, magnetic field and UV level), operating through the iOS application;

- Empatica E4 Wristband measuring physiological signals such as photoplethysmography (PPG), heart rate (BPM), electro-dermal activity (EDA) and skin temperature, and operating through the iOS application;

- Android Smartphone tracking GPS data and recording participants' feedback in the form of short audio-video clips taken with the front camera, as well as labels entered through a specially designed application.

Features

The collected data are provided in raw form and are also used for feature extraction, giving researchers a good starting feature set to work with.

Audio. We analyze the feedback recordings and use the open-source toolkit openSMILE to extract 130 low-level descriptors (Interspeech ComParE 2014 configuration) and the 88 expert-knowledge-based features that make up the eGeMAPS set.

Video. We extract two types of features from video data, each from a separate data source. For the feedback recordings, we use the open-source software OpenFace to extract Action Units, which describe facial muscle movements in accordance with the Facial Action Coding System. For the world camera recordings, we use the TensorFlow Object Detection API to describe the participant's surroundings.

Body movements. We use accelerometer data from the SenStick and the Android Smartphone to detect head tilts and extract footstep-based features.
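As a rough illustration of how head tilts can be derived from accelerometer data, the sketch below estimates pitch and roll from the gravity component of a single sample and flags samples above a threshold. It is not the corpus pipeline: the axis convention, the `tilt_angles` and `detect_tilts` helpers and the 20-degree threshold are all assumptions made for this example.

```python
import math


def tilt_angles(ax, ay, az):
    """Return (pitch, roll) in degrees for one accelerometer sample.

    Assumes the sensor is close to rest, so the reading is dominated by
    gravity. The axis convention (z pointing up when the head is level)
    is hypothetical and must be adapted to the actual sensor mounting.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def detect_tilts(samples, threshold_deg=20.0):
    """Return indices of samples whose pitch or roll exceeds the threshold.

    `threshold_deg` is an illustrative value, not one used for the corpus.
    """
    tilted = []
    for i, (ax, ay, az) in enumerate(samples):
        pitch, roll = tilt_angles(ax, ay, az)
        if abs(pitch) > threshold_deg or abs(roll) > threshold_deg:
            tilted.append(i)
    return tilted


if __name__ == "__main__":
    # Three samples in units of g: level, rolled sideways, slightly pitched.
    readings = [(0.0, 0.0, 1.0), (0.0, 1.0, 1.0), (0.1, 0.0, 1.0)]
    print(detect_tilts(readings))
```

In practice the raw signal would usually be low-pass filtered first, so that walking-induced acceleration is not mistaken for a change in head orientation.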

Eye movements. From the eye movement data recorded by the Pupil Labs Eye Tracker, we calculate several statistical features based on the spherical coordinates of the pupil.
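The exact statistics are not listed here, so the following is only a minimal sketch of what per-recording features over the two spherical angles could look like. The choice of statistics (mean, standard deviation, minimum, maximum, range), the angle names `theta` and `phi`, and the function names are assumptions for illustration.

```python
import statistics


def summarize(values):
    """Basic descriptive statistics for one series of angles (radians)."""
    return {
        "mean": statistics.fmean(values),
        "std": statistics.pstdev(values),
        "min": min(values),
        "max": max(values),
        "range": max(values) - min(values),
    }


def eye_movement_features(theta, phi):
    """Build a flat feature dict from two equally long angle series.

    `theta` and `phi` are assumed to be the spherical coordinates of the
    pupil for each frame of one recording segment.
    """
    features = {}
    for name, series in (("theta", theta), ("phi", phi)):
        for stat, value in summarize(series).items():
            features[f"{name}_{stat}"] = value
    return features


if __name__ == "__main__":
    feats = eye_movement_features([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
    for key, value in feats.items():
        print(key, round(value, 4))
```

A flat dictionary keeps the output easy to merge with features from the other modalities, one row per recording segment.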

Physiological data. We provide the data collected with Empatica's application to researchers as-is.

Labels

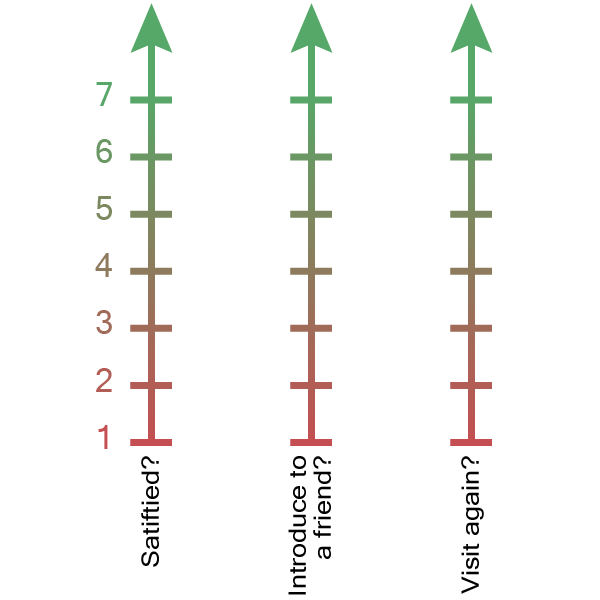

We asked participants to rate their feelings and emotional state using several scales, in order to get a comprehensive estimation.

Satisfaction. We use a 7-point Likert scale to measure satisfaction through three questions: (i) how satisfied are you with this sightseeing spot?; (ii) do you want to introduce this sight to another person?; (iii) do you want to visit this sight again?

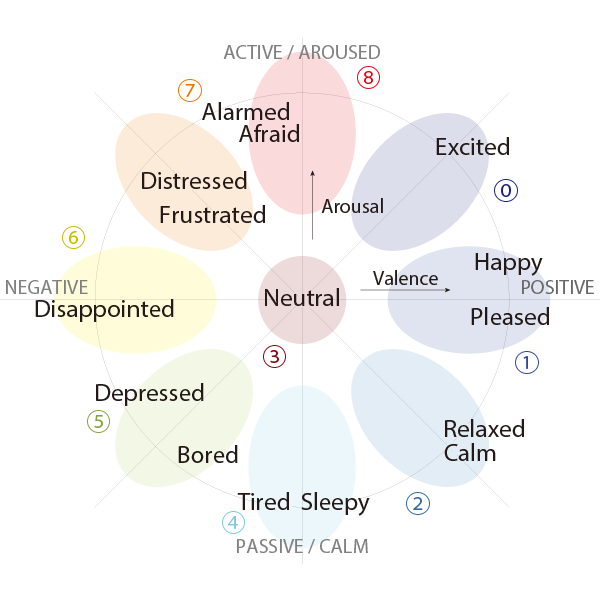

Emotions. We adapt Russell's circumplex model of affect and use nine emotional clusters that cover feelings likely to occur during a sightseeing tour and are easy to map to dimensional values (arousal and valence): excited, pleased / happy, calm / relaxed, neutral, sleepy / tired, bored / depressed, disappointed, distressed / frustrated, afraid / alarmed.

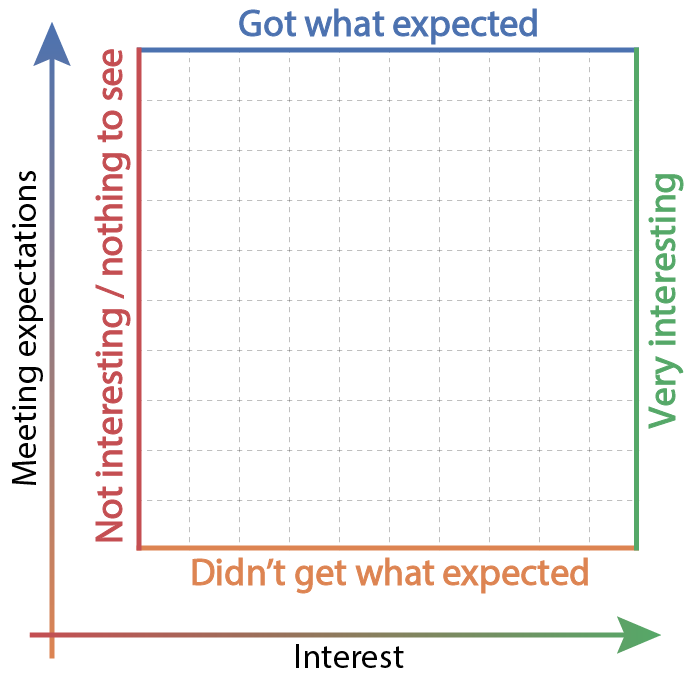
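To illustrate how the nine emotion clusters can be transferred to dimensional values, the sketch below uses a simple lookup table. The coordinates are placeholders chosen only to reflect the rough position of each cluster on the circumplex; they are assumptions for this example and are not values published with the corpus.

```python
# Placeholder (valence, arousal) coordinates in [-1, 1].
# These numbers are illustrative assumptions, NOT the corpus mapping.
EMOTION_TO_VA = {
    "excited": (0.7, 0.7),
    "pleased / happy": (0.9, 0.2),
    "calm / relaxed": (0.7, -0.6),
    "neutral": (0.0, 0.0),
    "sleepy / tired": (0.0, -0.8),
    "bored / depressed": (-0.6, -0.6),
    "disappointed": (-0.8, -0.2),
    "distressed / frustrated": (-0.7, 0.5),
    "afraid / alarmed": (-0.3, 0.8),
}


def to_valence_arousal(label):
    """Map a categorical emotion label to a (valence, arousal) pair."""
    key = label.strip().lower()
    if key not in EMOTION_TO_VA:
        raise ValueError(f"unknown emotion label: {label!r}")
    return EMOTION_TO_VA[key]


if __name__ == "__main__":
    for name in ("excited", "neutral", "bored / depressed"):
        print(name, to_valence_arousal(name))
```

Keeping the mapping in one table makes it easy to replace the placeholder coordinates with whichever values a given study adopts.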

Touristic experience quality. To obtain a rating system better suited to touristic experience, we developed a two-dimensional evaluation space called Touristic Experience Quality (TEQ). The dimensions of the TEQ plot represent interest and meeting expectations. The plot contains the emotional states listed above and gives more flexibility in selecting intermediate states or the intensity of a particular emotion. The dimensions of TEQ were defined from feedback given by participants after the experiments, so this labelling is available for the third experimental part (Kyoto, Japan) only.

Sightseeing profile and personality surveys. To keep recognition systems flexible, we collect additional data on participants, including their touristic experience and personality traits.

Availability

The corpus is free to use for academic, non-commercial purposes. To get access, the End-User License Agreement (EULA) must be filled out and signed by a researcher holding a permanent position at an academic institution, and a scan of it sent to Yuki Matsuda and Dmitrii Fedotov. A data sample is available here.

Most comprehensive paper

Yuki Matsuda, Dmitrii Fedotov, Yuta Takahashi, Yutaka Arakawa, Keiichi Yasumoto and Wolfgang Minker: “EmoTour: Estimating Emotion and Satisfaction of Users Based on Behavioral Cues and Audiovisual Data,” Sensors, Vol. 18, No. 11, 3978, 2018.

Impact Factor: 3.031 (2018)